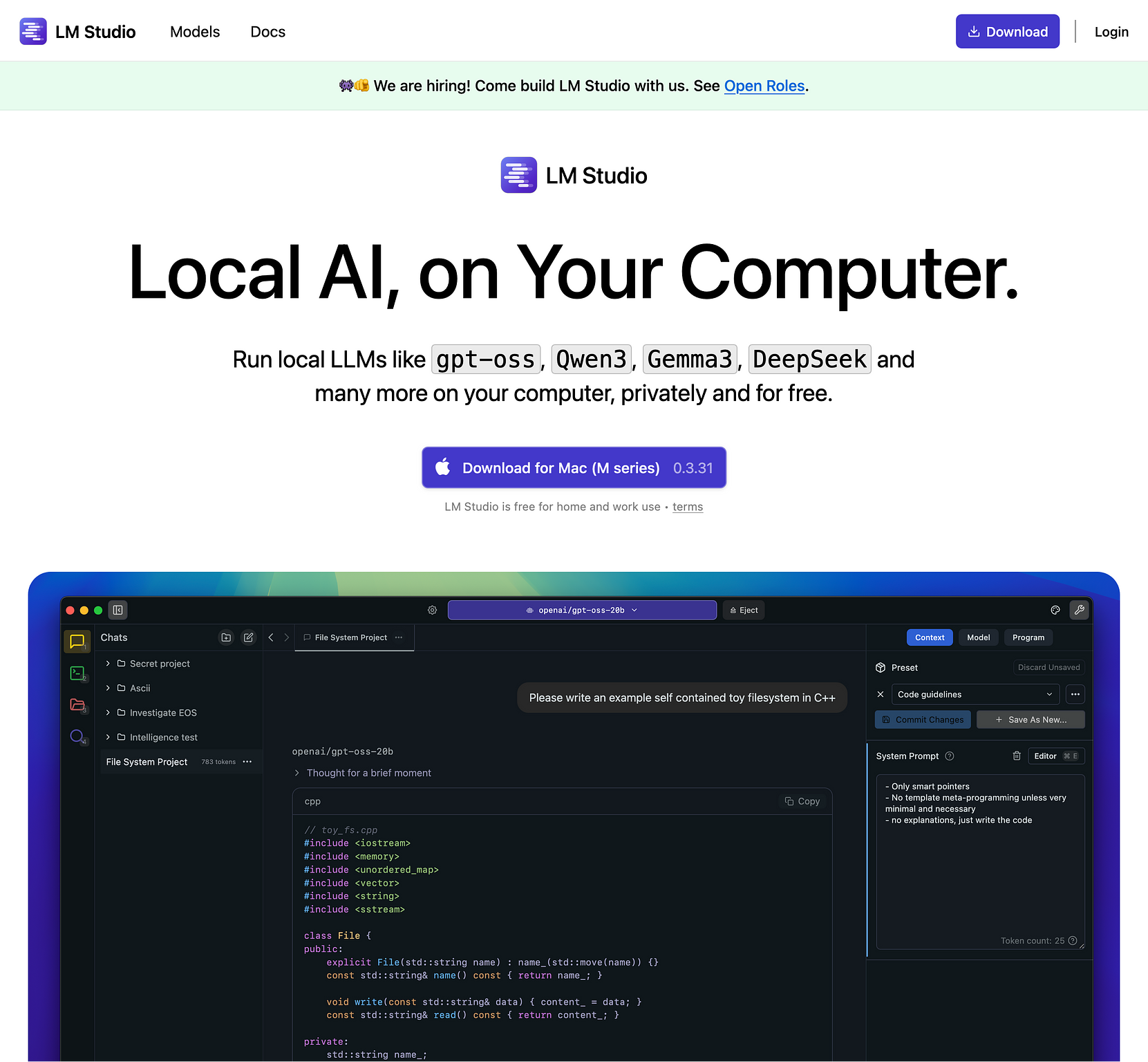

LM Studio

Run local LLMs like gpt-oss, Qwen3, Gemma3, DeepSeek and many more on your computer, privately and for free.

LM Studio is the app I now use for running local LLMs.<sup>1</sup> In LM Studio I mainly use two models: gemma-3-12b, mostly for generating alt text, and gpt-oss-20b. Both run fine on my Mac with 32 GB of RAM.